In the first part of our series on the development of science in the 20th century, we talked about the birth of new physics: Max Planck’s quantum mechanics and Albert Einstein’s theory of relativity. The new field of quantum theory would lay the foundation for the study of radioactivity – the process of decay of the atomic nucleus, the study of which would allow people to extract colossal amounts of energy from the smallest particles of matter. The first half of the 20th century was also marked by the rapid development of medicine in the field of fighting infectious disease, which was preceded by an amazing but completely accidental discovery.

Radioactivity: Rays Piercing the Universe

The first scientist to personally observe ionizing radiation (radioactive rays) in his laboratory was the German physicist Wilhelm Conrad Roentgen, head of the Institute of Physics at the University of Würzburg. His discovery took place on November 8, 1895.

In his laboratory, Roentgen was passing an electric current through cathode-ray vacuum tubes, wanting to study the behavior of electric charges in a vacuum. When a current was applied to two lamps located opposite each other, Roentgen found that a sheet of paper coated with a thin layer of barium platinocyanide crystals, which happened to be near the laboratory equipment, began to glow. This seemed strange, since the vacuum tubes with which Roentgen worked were shielded and simply could not transmit light. Roentgen would later find similar fluorescent properties in rock salt, uranium glass, and calcite.

Roentgen initially thought that he had discovered a new kind of light from a part of the spectrum that was for some reason invisible to the human eye, but he quickly became convinced that this was not the case. The rays he discovered, which he designated with the letter X (X-rays), did not show the characteristic properties of light: in addition to passing through opaque materials, they did not appear to refract or to reflect from reflective surfaces. X-rays also ionized the surrounding air, and he thus termed the phenomenon “ionizing radiation.”

Hoping to make practical use of the discovery, Roentgen constructed a modified version of his vacuum tube with a flat anticathode inside the lamp bulb. The new lamps allowed Roentgen to obtain a much more intense and continuous flow of ionizing radiation. The rays, though invisible to the eye, were also able to expose photographic film, which made Roentgen curious to see what sorts of things they could show in a picture. Just over a month after the discovery of his amazing radiation, Roentgen used it to make the world’s first X-ray image, photographing the hand of his wife, Anna Bertha Ludwig.

Seeing her own skeleton, Roentgen’s wife exclaimed, “I saw my own death!”

What Wilhelm Roentgen observed was not really a new kind of light, but rather high-frequency electromagnetic radiation with an extremely short wavelength emanating from certain types of matter. Soon, French physicist Henri Becquerel would begin to conduct his own research into ionizing radiation. Becquerel noted that radiation could come not only with an electric current, but that it also naturally radiated from certain materials, in particular uranium salts.

The first to call this amazing phenomenon “radioactivity” was Marie Curie – a gifted student of Becquerel in the Department of Physics at the École supérieure de physique et de chimie industrielles at the University of Paris. Together with her husband, Pierre, she began to study radioactivity, which in the future would allow her to discover radium and polonium. In 1903, Marie would receive the Nobel Prize in Physics alongside her husband and her mentor, Henri Becquerel.

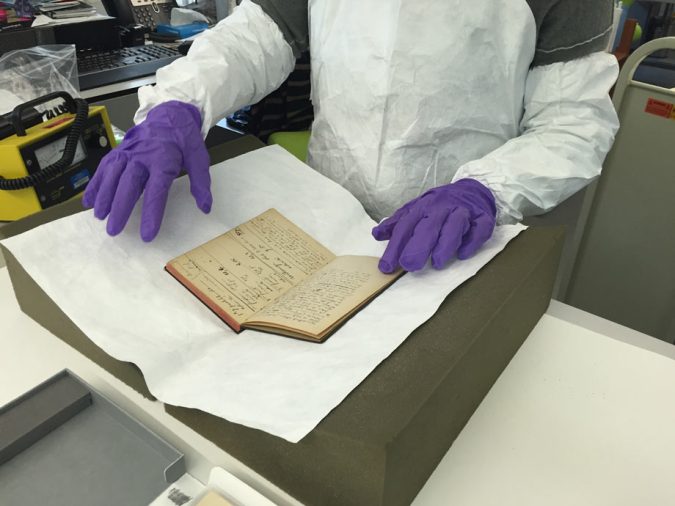

By 1902, Marie Curie had become the first to isolate radium, which before then had never been found in nature in its pure form. To do this, she had to process several tons of pitchblende (uranium ore) to end up with only a tenth of a gram of pure radium. The process was not only painstaking, but also fatally dangerous to Marie’s health. The roughly forty years she spent in constant contact with radioactive materials led to aplastic anemia, a bone marrow disease attributed to her long exposure to radiation. Curie died of her illness in 1934, at the age of 66, but her work equipment, desk, notes, and even her cookbook still emit radiation and are stored behind special shielding to protect those who come to see them.

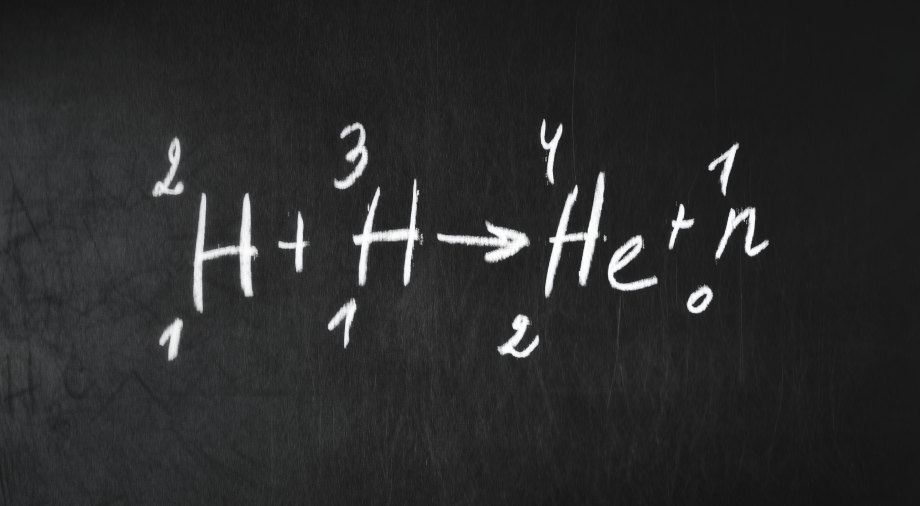

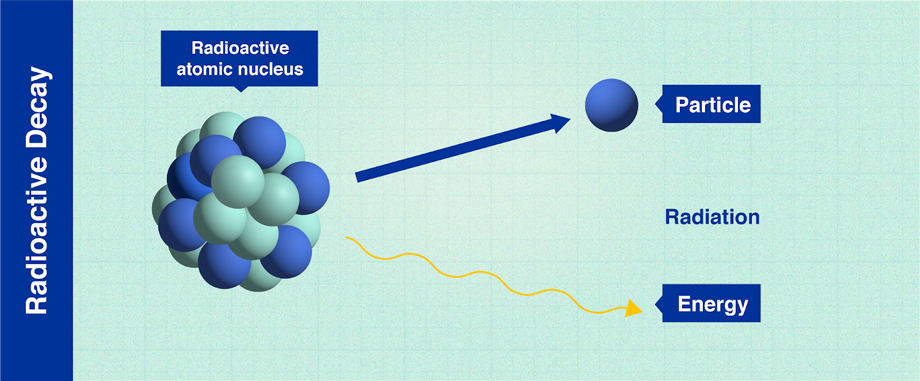

Nuclear fission was first explained in 1939 by the Austrian-born physicists Lise Meitner and Otto Frisch, who were interpreting the experimental results of Otto Hahn and Fritz Strassmann. The discovery of this phenomenon, which Meitner and Frisch named “fission,” led to an understanding of how to control the process in nuclear reactors. Thanks to reactors, in the second half of the 20th century, certain highly developed countries that had mastered the technology of nuclear power generation were able to meet a large share of their electricity needs. The mastery of radioactivity has helped humanity to solve a number of environmental problems, from clean power generation to the purification of wastewater.

(Infographic: Adriana Vargas/IAEA)

Understanding of radioactive decay (particularly of half-lives) helped humanity not only to invent the first ultra-precise atomic clock, but also to look back into the past with the use of radiocarbon dating, which was invented in 1946 by the American physical chemist Willard Libby. Libby came up with the idea of determining the age of organic materials by measuring their content of the unstable radioactive carbon isotope 14C, whose half-life is about 5,730 years. Using his new method, Libby determined the age of the remains of a native North American (9,500 years old), estimated the approximate age of a fragment of a wooden oar found in Egypt (3,000 years old), and launched a whole new scientific field. The calibration curves for radiocarbon dating adopted in 2020 (IntCal20 and SHCal20) make it possible to date samples up to 55,000 years old.
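The arithmetic behind the method can be sketched in a few lines of Python. This is only an idealized illustration, assuming simple exponential decay with the 5,730-year half-life quoted above; the function names are made up for this example, and real laboratories also correct their results against calibration curves such as IntCal20, which this sketch ignores.

```python
import math

# Half-life of carbon-14 in years, as quoted in the text.
HALF_LIFE_C14_YEARS = 5730.0


def remaining_fraction(age_years, half_life=HALF_LIFE_C14_YEARS):
    """Fraction of the original 14C still present after age_years."""
    # Every half-life that passes cuts the remaining amount in half.
    return 0.5 ** (age_years / half_life)


def radiocarbon_age(fraction_remaining, half_life=HALF_LIFE_C14_YEARS):
    """Idealized, uncalibrated age in years from the measured 14C fraction.

    fraction_remaining is the ratio of 14C in the sample to the 14C
    expected in living material (a hypothetical measurement input).
    """
    if not 0.0 < fraction_remaining <= 1.0:
        raise ValueError("fraction_remaining must be in the interval (0, 1]")
    # Invert N/N0 = 0.5 ** (t / half_life) to solve for t.
    return -half_life * math.log2(fraction_remaining)


if __name__ == "__main__":
    # A sample with half of its 14C left is one half-life old.
    print(radiocarbon_age(0.5))
    # How little 14C is left at the 55,000-year limit mentioned above.
    print(remaining_fraction(55000))
```

A sample that retains a quarter of its 14C comes out at two half-lives, or about 11,460 years. At 55,000 years only a little over a tenth of a percent of the original 14C remains, which helps explain why the method has an upper age limit.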

Radiation would shape medicine not only through the development of X-ray diagnostics. Even during the First World War, Marie Curie drew attention to the fact that with the help of highly-concentrated, high-frequency ionizing radiation, it was possible to effectively fight cancer cells. Carelessly handled, radiation could bring death from cancer, but correctly applied, it could treat it.

But radioactivity was not the only tool that helped people in the 20th century fight disease. By the 1950s, another new breakthrough awaited humanity, this time in the field of bacteriology.

The Mold That Saved Millions

In 1928, Alexander Fleming, a professor of bacteriology at St Mary’s Hospital Medical School in London, went on vacation for several weeks. Upon returning to his laboratory, he discovered a strange thing. Some of the colonies of staphylococcal bacteria that Fleming was studying in Petri dishes had died out. The cause was a patch of a common mold, which Fleming would later name Penicillium notatum.

Fleming was surprised by the reaction of the bacteria to the substance excreted by the mold, and a year later, he described its potential therapeutic properties in the British Journal of Experimental Pathology. Unfortunately, in those days, scientists did not have methods for obtaining a stable solution of pure penicillin from the mold juice, which is why Fleming’s transformative medical discovery would lie dormant for 10 long years.

Photo: Bristol-Myers Squibb Corporation

In 1939, a large-scale effort began for the production of medical penicillin. At the Oxford University Laboratories, a group of scientists led by Howard Florey and Ernst Chain began work on modernizing the process of obtaining a solution of pure penicillin from mold filtrate. To do this, scientists had to process up to 500 liters of mold filtrate per week, but the end result was well worth the effort.

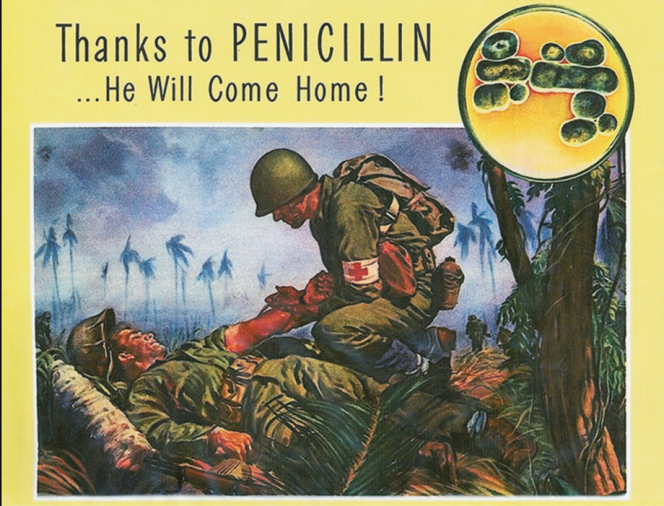

New methods for refining penicillin proposed by biochemist Edward Abraham greatly sped up the process and improved the quality of the drug, and in February 1941, after a series of trials on mice, penicillin was successfully given to humans for the first time. England came close to producing penicillin on an industrial scale, but the Second World War and the constant raids by the German Luftwaffe on Britain took their toll. Full industrial production of penicillin during the war would be established only in the United States.

During the war, the effort to produce penicillin was led by US pharmaceutical companies Merck, Pfizer, Squibb, and Abbott Laboratories. In 1944, nearly 1.7 trillion units of penicillin were produced, shooting up to 6.8 trillion units the next year.

Photo: National Museum of World War II

In 1945, Fleming (alongside Howard Florey and Ernst Chain) was awarded the Nobel Prize in Physiology or Medicine “for the discovery of penicillin and its curative effect in various infectious diseases.”

Despite penicillin’s excellent antibiotic properties, its large-scale and uncontrolled use has brought a number of problems to mankind, first and foremost the emergence of antibiotic-resistant strains of bacteria. Today, the WHO and the UN consider these so-called “superbugs” to be one of the most serious challenges to medicine, one which humanity will face especially acutely by the middle of this century. It is estimated that by 2050, strains of antibiotic-resistant bacteria could cause the deaths of 10 million people annually.

The histories of radioactivity and of penicillin are clear examples of how a technology can be both beneficial and detrimental at the same time. With such a rapid pace of development, it was vital for science to be able to produce far more predictable conclusions. For this, humanity would need the advent of computers, whose development would come to dominate the second half of the 20th century. This will be the topic of our next installment.